Last Updated on May 23, 2024 2:24 pm by Laszlo Szabo / NowadAIs | Published on May 23, 2024 by Laszlo Szabo / NowadAIs

Dual3D: 1-Minute Text-to-3D Model Generation with AI – Key Notes

- Dual3D: A new framework for text-to-3D generation that combines efficiency, consistency, and high quality.

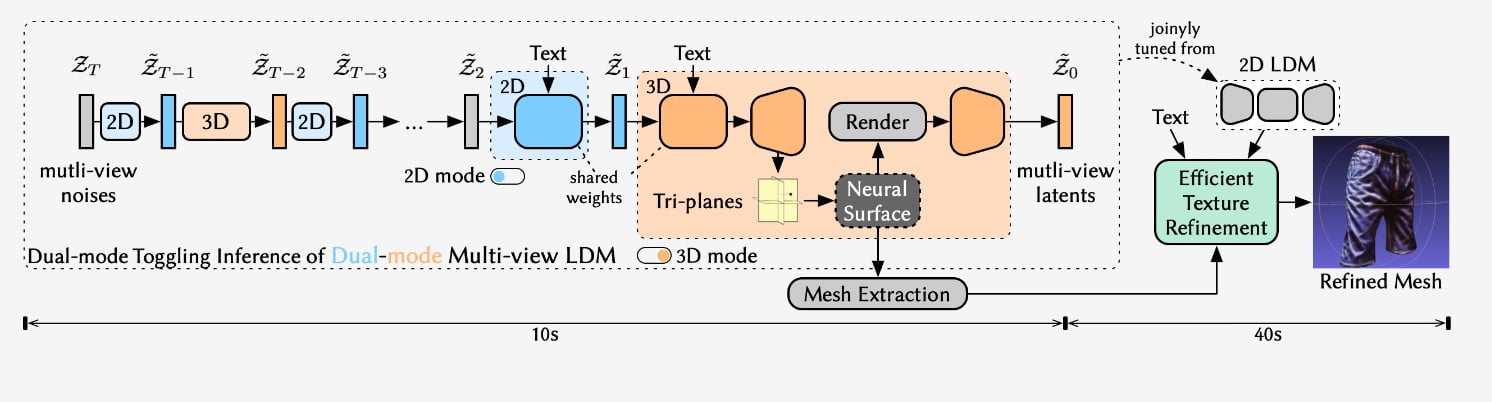

- Dual-mode architecture: Uses a 2D mode to efficiently denoise multi-view latents and a 3D mode that generates a tri-plane neural surface for consistent, rendering-based denoising.

- Dual-mode toggling inference: Runs only one-tenth of the denoising steps in the costly 3D mode, cutting rendering cost and bringing generation time down to about 10 seconds.

- Diverse generation: Produces a wide range of 3D assets from various textual descriptions.

Introduction

In the ever-evolving landscape of digital content creation, the ability to turn textual descriptions into immersive 3D assets has become highly sought after. Dual3D is a new framework that offers a novel approach to text-to-3D generation, combining efficiency, consistency, and high quality.

The Dual-Mode Advantage

The core innovation behind Dual3D is its dual-mode architecture, in which two modes work together: a 2D mode and a 3D mode. The 2D mode efficiently denoises the noisy multi-view latents, while the 3D mode generates a tri-plane neural surface and denoises by rendering it, which keeps the views consistent with one another. This combination streamlines the generation process and helps the resulting 3D assets stay visually coherent.
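The article does not describe how the 3D mode's tri-plane is queried, so the following is only a rough illustration of the general tri-plane idea, not Dual3D's actual code. It projects each 3D point onto three axis-aligned feature planes (named `xy`, `xz`, and `yz` here), bilinearly samples each plane, and sums the three results. The plane names, sizes, and sum aggregation are assumptions; in a complete system, a small decoder network would turn these features into surface and color values that can be rendered.

```python
import numpy as np


def bilinear_sample(plane: np.ndarray, u: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Sample a (C, H, W) feature plane (H, W >= 2) at u (width) and v (height) in [-1, 1].

    Returns an (N, C) array of interpolated features, one row per query point.
    """
    _, h, w = plane.shape
    # Clamp to the plane's extent, then map [-1, 1] to pixel coordinates.
    x = (np.clip(u, -1.0, 1.0) + 1.0) * 0.5 * (w - 1)
    y = (np.clip(v, -1.0, 1.0) + 1.0) * 0.5 * (h - 1)
    # Top-left neighbour; clip so that x0 + 1 and y0 + 1 stay in bounds.
    x0 = np.clip(np.floor(x).astype(int), 0, w - 2)
    y0 = np.clip(np.floor(y).astype(int), 0, h - 2)
    dx, dy = x - x0, y - y0
    # Blend the four neighbouring texels (each lookup has shape (C, N)).
    top = plane[:, y0, x0] * (1 - dx) + plane[:, y0, x0 + 1] * dx
    bottom = plane[:, y0 + 1, x0] * (1 - dx) + plane[:, y0 + 1, x0 + 1] * dx
    return (top * (1 - dy) + bottom * dy).T


def query_triplane(planes: dict, points: np.ndarray) -> np.ndarray:
    """Aggregate tri-plane features for (N, 3) points in [-1, 1]^3 by summation."""
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    return (
        bilinear_sample(planes["xy"], x, y)
        + bilinear_sample(planes["xz"], x, z)
        + bilinear_sample(planes["yz"], y, z)
    )


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical sizes: 8 feature channels per plane, 32x32 resolution.
    planes = {name: rng.standard_normal((8, 32, 32)) for name in ("xy", "xz", "yz")}
    points = rng.uniform(-1.0, 1.0, size=(5, 3))
    features = query_triplane(planes, points)
    print(features.shape)  # (5, 8)
```

Summing the three plane features is one common choice; concatenating them is another, at the cost of a wider decoder input.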

Tapping into Pre-Trained Expertise

To avoid the cost of training from scratch, the Dual3D authors fine-tune various modules from a pre-trained text-to-image latent diffusion model. This shortens training and lets the framework reuse the image knowledge the pre-trained model has already learned, yielding a more robust and capable text-to-3D generator.

Dual-Mode Toggling Inference

A key challenge in text-to-3D generation is the high rendering cost during inference. Dual3D addresses it with a dual-mode toggling inference strategy: the framework toggles between its two modes and uses the expensive 3D mode for only one-tenth of the denoising steps, while still maintaining the quality of the generated 3D assets. As a result, generation takes only about 10 seconds, which is very fast for text-to-3D generation.
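To make the one-tenth ratio concrete, here is a minimal Python sketch of a toggling loop. It assumes a hypothetical rule in which every tenth step calls the 3D-mode denoiser and all other steps call the 2D-mode denoiser; `toggling_schedule`, `sample`, and `toy_denoiser` are illustrative placeholders, not Dual3D's API, and the toy denoiser stands in for the real networks.

```python
from typing import Callable, Dict, List, Tuple

import numpy as np

# A denoiser takes the current multi-view latents and a timestep index.
Denoiser = Callable[[np.ndarray, int], np.ndarray]


def toggling_schedule(num_steps: int, interval: int = 10) -> List[str]:
    """Assign '2d' or '3d' to each denoising step.

    Hypothetical rule: every `interval`-th step uses the expensive 3D mode,
    all other steps use the cheap 2D mode, and the last step is always 3D.
    """
    if num_steps <= 0 or interval <= 0:
        raise ValueError("num_steps and interval must be positive")
    schedule = ["3d" if (i + 1) % interval == 0 else "2d" for i in range(num_steps)]
    schedule[-1] = "3d"  # end on a rendering-based, view-consistent step
    return schedule


def sample(
    latents: np.ndarray,
    num_steps: int,
    denoise_2d: Denoiser,
    denoise_3d: Denoiser,
    interval: int = 10,
) -> Tuple[np.ndarray, Dict[str, int]]:
    """Run the toggling loop and count how often each mode was called."""
    counts = {"2d": 0, "3d": 0}
    for step, mode in enumerate(toggling_schedule(num_steps, interval)):
        t = num_steps - 1 - step  # timestep index counts down to 0
        denoiser = denoise_3d if mode == "3d" else denoise_2d
        latents = denoiser(latents, t)
        counts[mode] += 1
    return latents, counts


def toy_denoiser(x: np.ndarray, t: int) -> np.ndarray:
    """Stand-in for a real network: simply shrinks the latents."""
    return 0.9 * x


if __name__ == "__main__":
    # Hypothetical input: 4 views of 8x8 latents.
    noisy = np.random.default_rng(0).standard_normal((4, 8, 8))
    _, counts = sample(noisy, num_steps=50, denoise_2d=toy_denoiser, denoise_3d=toy_denoiser)
    print(counts)  # {'2d': 45, '3d': 5}
```

With 50 steps, only 5 calls go through the costly rendering path and the other 45 use the lighter 2D path. The step count and the even spacing are illustrative choices, not values from the article.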

Texture Refinement: The Final Touch

To further improve the visual quality of the generated 3D assets, Dual3D adds an efficient texture refinement process. This extra step sharpens and polishes the textures of the 3D models so they blend well with the overall look of the generated content.

Diverse Generation: Catering to Varied Preferences

Dual3D is also versatile: it can generate a wide range of 3D assets from varied textual descriptions, from everyday objects to fantastical creations, letting users bring imaginative prompts to life.

Fine-Grained Generation: Attention to Detail

Beyond broad categories, Dual3D can also generate detailed, specialized 3D models. Whether the prompt describes a sleek supercar, a whimsical sushi-inspired vehicle, or a sophisticated piece of furniture, the framework captures fine-grained design details.

Consistent Quality: A Hallmark of Dual3D

Consistency is one of Dual3D's standout features. Across assets of varying complexity, the framework produces visually coherent results, in line with its rendering-based 3D mode, which keeps the generated views consistent with one another.

The Future of Dual3D: Endless Possibilities

As the field of text-to-3D generation continues to evolve, Dual3D is well positioned among current approaches. With its dual-mode architecture, efficient generation, and consistent quality, the framework has the potential to change how we create and interact with 3D content, showing what becomes possible when text and 3D worlds converge.

Conclusion: Embracing the Dual3D Revolution

Dual3D marks a significant step for digital content creation. By bridging the gap between textual descriptions and 3D assets, the framework opens new avenues for creative expression and could change how we conceptualize and interact with AI and virtual environments.

Definitions

- Dual3D: A framework that translates text descriptions into detailed 3D models using a dual-mode architecture.

- 3D Model: A digital representation of a three-dimensional object created using specialized software.

- Text-to-3D Generation Framework: A system that converts textual descriptions into 3D models, leveraging AI and machine learning techniques.

- Texture Refinement Process: A method to enhance the surface details and visual quality of 3D models, making them appear more realistic and polished.

Frequently Asked Questions

- What is Dual3D? Dual3D is an advanced framework designed to transform textual descriptions into high-quality 3D models. It uses a dual-mode architecture to achieve efficient and consistent 3D generation.

- How does Dual3D maintain high visual quality? Dual3D combines a 2D mode for efficient denoising with a 3D mode that renders a tri-plane neural surface, keeping the generated views consistent. It also includes a texture refinement process to polish the final 3D models.

- What are the key features of Dual3D? Key features include the dual-mode architecture, reuse of a pre-trained text-to-image model, dual-mode toggling inference for faster generation, and the ability to create complex compositional scenes and highly detailed 3D assets.

- How quickly can Dual3D generate 3D models? Thanks to its dual-mode toggling inference strategy, Dual3D can generate high-quality 3D models in about 10 seconds, with the texture refinement step adding some extra time. This makes it highly efficient for content creators.

- What types of 3D assets can Dual3D create? Dual3D can create a wide range of 3D assets, from everyday objects to intricate, imaginative creations, and its fine-grained generation capability supports detailed, specialized models.