Last Updated on March 7, 2024 1:07 pm by Laszlo Szabo / NowadAIs | Published on March 7, 2024 by Laszlo Szabo / NowadAIs

Explore What Makes the New Stable Diffusion 3 Stand Out! – Key Notes:

- Stable Diffusion 3 introduces a diffusion transformer architecture, enhancing text-to-image model performance.

- It’s part of a family of models offering greater flexibility for text-based image generation.

- Users can access it through a membership program or API, with a waitlist for early previews.

- Notable improvements include better text generation, enhanced prompt following, and efficient speed and deployment.

- Focuses on generating safe-for-work images and includes artist opt-out options.

What is Stable Diffusion 3?

Announcing Stable Diffusion 3, our most capable text-to-image model, utilizing a diffusion transformer architecture for greatly improved performance in multi-subject prompts, image quality, and spelling abilities.

Today, we are opening the waitlist for early preview. This phase… pic.twitter.com/FRn4ofC57s

— Stability AI (@StabilityAI) February 22, 2024

Stable Diffusion 3 is the latest generation of text-to-image AI models developed by Stability AI. Unlike its predecessors, SD3 is not a single model but a family of models, ranging from 800M to 8B parameters. This diverse range of models allows for greater flexibility and customization in generating text-based images. The product design of SD3 follows the industry trend of large language models, where different-sized models cater to specific use cases.

To access Stable Diffusion 3, users can join the membership program offered by Stability AI, either for self-hosting or utilizing their powerful API. While the model is not publicly available yet, interested individuals can join the waitlist for an early preview of this AI technology.

Improvements in Stable Diffusion 3

According to research paper Stable Diffusion 3 brings several notable improvements compared to its predecessors. Let’s explore some of the key enhancements that make SD3 a game-changer in text-to-image generation.

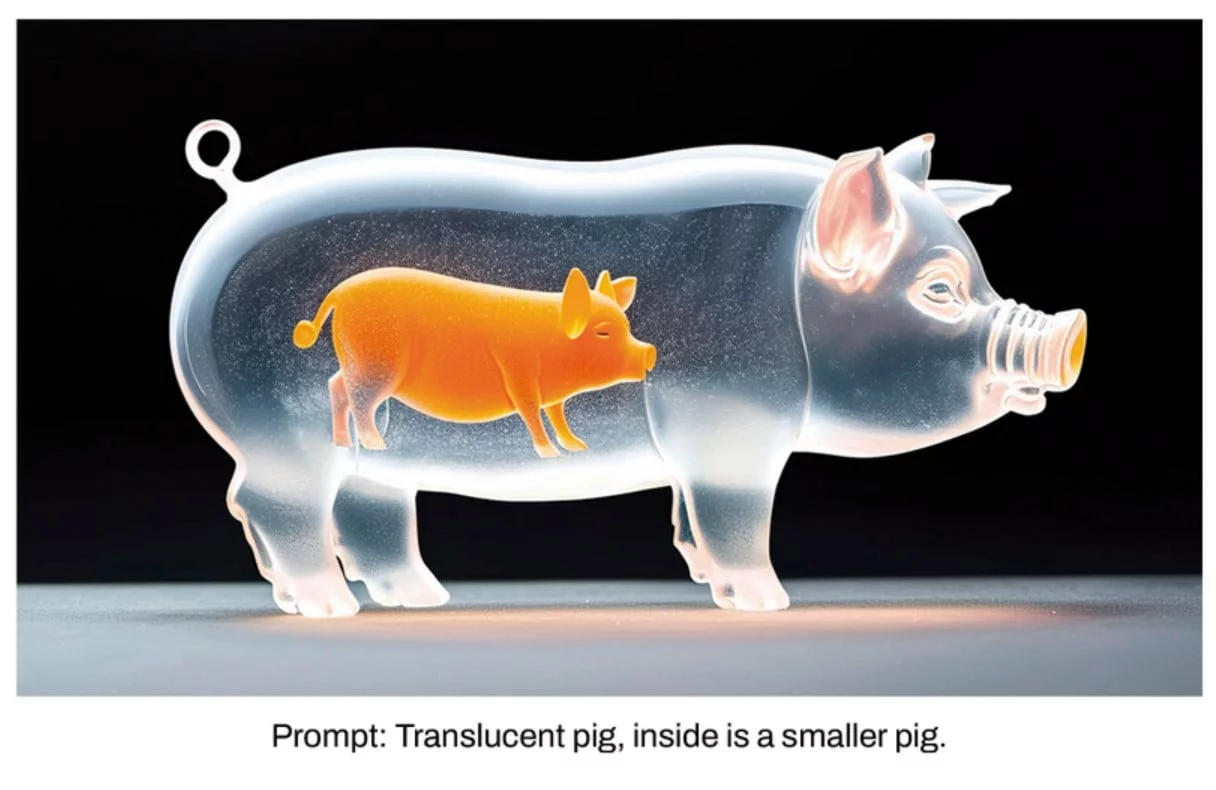

Better Text Generation

Previous versions of Stable Diffusion struggled with text rendering, particularly when it came to generating long sentences and diverse font styles. However, with Stable Diffusion 3, we witness significant advancements in this area. Sample images generated by SD3 showcase impressive long sentences with visually appealing font styles. This enhancement presents a substantial improvement over the previous models, such as Stable Diffusion XL and Stable Cascade, which often required extensive cherry-picking to achieve comparable results.

Enhanced Prompt Following

Prompt adherence is a crucial aspect of text-to-image generation models. Stable Diffusion 3 addresses this challenge by incorporating innovative techniques inspired by the highly accurate image captions used in DALLE 3. This new approach enables SD3 to closely follow the provided prompts. In user studies, Stable Diffusion 3 has demonstrated performance on par with DALLE 3 in terms of prompt-following capabilities. This improvement opens up exciting possibilities for generating images that align closely with the intended context.

Speed and Deployment

Stable Diffusion 3 offers impressive speed and deployment capabilities. The largest SD3 model, with 8B parameters, can be run locally on a video card with 24GB RAM. During initial benchmarking, it took only 34 seconds to generate a 1024×1024 resolution image using 50 sampling steps on an RTX 4090 video card. As the model continues to evolve and optimizations are implemented, we can expect further improvements in speed and efficiency. This scalability ensures that Stable Diffusion 3 remains accessible to a wide range of users, even on consumer hardware.

Safety Measures

Similar to other models in the Stable Diffusion lineup, Stable Diffusion 3 focuses on generating safe-for-work (SFW) images. This approach ensures that the model adheres to ethical guidelines and minimizes the risk of generating inappropriate content. Additionally, Stability AI allows artists to opt out of having their work included in the training process, further safeguarding their creations and reducing the chances of misuse.

What’s New in Stable Diffusion 3 Model?

Stable Diffusion 3 introduces several notable changes and features that enhance its overall performance and functionality. Let’s take a closer look at some of these advancements:

Noise Predictor

Stable Diffusion 3 adopts a different approach to noise prediction compared to its predecessors. Instead of using the U-Net noise predictor architecture, SD3 utilizes a repeating stack of Diffusion Transfers. This change brings the benefits associated with using transformers in large language models, providing predictable performance improvements as the model size increases. The architecture of the diffusion transformer block ensures that both the text prompt and the latent image play integral roles in the generation process. Moreover, this architecture paves the way for incorporating multimodal conditionings, such as image prompts, into the model.

Sampling Techniques

To ensure fast and high-quality image generation, the Stability team dedicated significant effort to studying sampling methods for Stable Diffusion 3. SD3 employs Rectified Flow (RF) sampling, which offers a direct and efficient path from noise to clear images. This approach allows for quicker generation while maintaining image quality. Additionally, a noise schedule that focuses more on the middle part of the sampling trajectory has been found to produce higher-quality images. These innovative sampling techniques enhance the overall performance and output of Stable Diffusion 3.

Improved Text Encoders

Text encoders play a crucial role in text-to-image generation models. Stable Diffusion 3 incorporates three different text encoders, each serving a specific purpose:

- OpenAI’s CLIP L/14

- OpenCLIP bigG/14

- T5-v1.1-XXL

These text encoders enhance the model’s understanding of textual input and contribute to more accurate and contextually aligned image generation. The inclusion of multiple encoders allows for greater flexibility and enables users to tailor the model’s behavior based on their specific requirements. The T5-v1.1-XXL encoder, although substantial in size, can be omitted if text generation is not a primary focus.

Enhanced Caption Generation

Caption quality significantly impacts prompt following in text-to-image models. Stable Diffusion 3 takes a cue from DALLE 3 and utilizes highly accurate captions in its training process. This approach ensures that the model excels in closely adhering to the provided prompts, resulting in more contextually relevant and visually appealing image outputs.

Conclusion

Stable Diffusion 3 represents a significant milestone in the field of text-to-image generation. With its family of models, ranging from 800M to 8B parameters, SD3 offers unparalleled flexibility and customization. The advancements in text generation, prompt following, speed, and safety measures make SD3 a powerful tool for various applications. By incorporating innovative techniques such as noise predictors, improved sampling, and enhanced text encoders, Stable Diffusion 3 pushes the boundaries of what is possible in the realm of AI-generated images.

As Stable Diffusion 3 continues to evolve, we can expect even more exciting developments in the field of text-to-image generation. By combining cutting-edge technology with ethical considerations, Stability AI sets the stage for AI models that not only excel in performance but also prioritize user safety and content integrity. With its potential to revolutionize creative workflows and empower artists, Stable Diffusion 3 heralds a new era in AI-assisted image generation.

For the latest updates on Stable Diffusion 3 and to explore the world of AI-generated art, follow Stability AI on Twitter, Instagram, and LinkedIn, or join their Discord community. Stay tuned for the official release of Stable Diffusion 3 and embark on a journey of limitless creativity!

Definitions:

- Stable Diffusion 3: A state-of-the-art text-to-image AI model developed by Stability AI, featuring a diffusion transformer architecture for enhanced performance in generating detailed and contextually accurate images.

- Stability AI: The company behind Stable Diffusion, dedicated to advancing AI technology with a focus on creating models that safely generate high-quality digital art and imagery.

- Text Encoders: AI components that convert text into a format understandable by machines, playing a critical role in interpreting textual prompts for image generation.

- DALLE 3: A model by OpenAI that generates images from textual descriptions, known for its precision in creating accurate and context-aware visuals from given prompts.

Frequently Asked Questions:

- What sets Stable Diffusion 3 apart from its predecessors? Stable Diffusion 3 utilizes a diffusion transformer architecture, offering significant advancements in multi-subject prompts, image quality, and spelling capabilities compared to earlier versions.

- How can I access Stable Diffusion 3? Access is provided through Stability AI’s membership program or a powerful API, with an early preview waitlist available for interested users.

- What improvements does Stable Diffusion 3 bring to text-to-image generation? It introduces better text generation, enhanced prompt adherence, and improved speed and deployment capabilities, setting new standards for AI-driven art creation.

- What safety measures does Stable Diffusion 3 implement? It emphasizes the generation of safe-for-work content and allows artists to opt out of having their work included in the training process to safeguard creations and minimize misuse risks.

- How does Stable Diffusion 3’s technology influence its performance? By adopting a diffusion transformer architecture and incorporating improved text encoders and sampling techniques, Stable Diffusion 3 achieves predictable performance improvements, making it a pioneering tool in AI-generated art.