Last Updated on January 29, 2024 9:57 am by Laszlo Szabo / NowadAIs | Published on January 29, 2024 by Laszlo Szabo / NowadAIs

GPT-4 Turbo & GPT-3.5 Turbo: Updated Kids on the Block – Key Notes

- GPT-4 Turbo: Enhanced AI model for improved performance and efficiency.

- Ethical AI: Introduction of an updated text moderation model for safer, more responsible content generation.

- Privacy Commitment: OpenAI’s decision not to use API data for model training by default.

- Microsoft Partnership: Collaboration with Microsoft for model scaling and infrastructure support.

- AI Evolution: Represents a shift towards more efficient, ethical, and versatile AI applications.

Updated Models and Ethics

With its recent announcement of GPT-4 Turbo, OpenAI continues to redefine the landscape of artificial intelligence, cementing its position as a leader in the field.

Let’s unpack what this means for the industry and, more importantly, for those of us who rely on these technologies.

BiTurbo Charging GPT: Smaller and Larger Text Embeddings

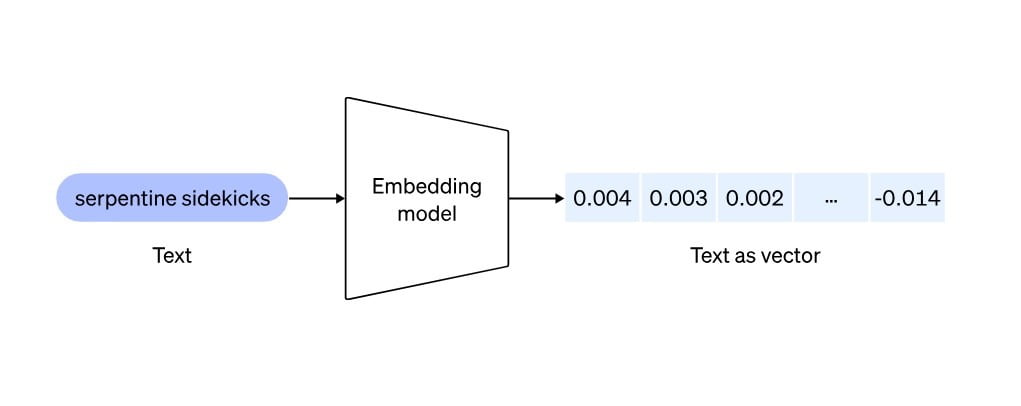

First off, let’s talk about OpenAI’s introduction of two new embedding models: text-embedding-3-small and text-embedding-3-large.

The smaller model is a strong fit for applications where efficiency is key. OpenAI prices it well below its predecessor, text-embedding-ada-002, which makes it attractive when you need to embed large volumes of text quickly and cheaply.

On the other hand, the larger model pushes the boundaries of performance, offering deeper and more nuanced understanding. In the words of OpenAI,

“These models provide a spectrum of trade-offs between cost, speed, and quality”.
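Both models turn a piece of text into a vector of floating-point numbers. A common way to use those vectors is semantic search: embed the query and the documents, then rank the documents by cosine similarity to the query. The sketch below illustrates only that ranking step, using tiny made-up 4-dimensional vectors in place of real model output (real text-embedding-3 vectors are much longer); the helper names `cosine_similarity` and `rank_documents` are hypothetical.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_documents(query: list[float],
                   docs: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Return (name, score) pairs sorted from most to least similar to the query."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in docs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Toy vectors for illustration only; a real application would obtain these
# from an embedding model such as text-embedding-3-small.
docs = {
    "refund policy": [0.9, 0.1, 0.0, 0.2],
    "shipping times": [0.1, 0.8, 0.3, 0.0],
    "return an item": [0.8, 0.2, 0.1, 0.3],
}
query = [0.85, 0.15, 0.05, 0.25]  # e.g. "how do I get my money back?"

for name, score in rank_documents(query, docs):
    print(f"{name}: {score:.3f}")
```

The same ranking code works whichever model produced the vectors; the cost, speed, and quality trade-off lies in how the vectors are generated and how long they are.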

A New Era for GPT-4 and GPT-3.5

Moving on to the stars of the show, GPT-4 Turbo and the new GPT-3.5 Turbo model.

These aren’t just your run-of-the-mill updates. The new snapshots, available in the API as gpt-4-0125-preview and gpt-3.5-turbo-0125, are refined, more efficient versions of the already powerful GPT-4 and GPT-3.5 models.

OpenAI claims that GPT-4 Turbo is “more performant” and has been optimized for “lower latency and higher throughput.”

This means quicker responses and the ability to handle more requests, a significant improvement for businesses and developers relying on these models.

The Guardian of Ethics: Updated Text Moderation Model

In the realm of AI, with great power comes great responsibility.

Recognizing this, OpenAI has also released an updated text moderation model. This isn’t just a fancy add-on; it’s a crucial element in ensuring that the content generated by AI remains safe and appropriate.

OpenAI emphasizes that this model, released as text-moderation-007, helps in “identifying generated text that violates OpenAI’s use case policy.”

It’s a step towards more ethical AI usage, balancing innovation with responsibility.
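In practice, a moderation model sits between generation and display: generated text is checked first and shown only if it is not flagged. The sketch below shows that gating pattern with a pluggable `moderate` callable. The keyword-based `is_flagged_by_keywords` function is a hypothetical offline placeholder so the example runs without network access; it is not how OpenAI’s model works. In a real application you could back `moderate` with OpenAI’s Moderation endpoint, for example via `client.moderations.create(input=text)` in the official Python SDK, whose results carry a `flagged` field.

```python
from typing import Callable

# A moderation check takes text and returns True if it should be blocked.
ModerationCheck = Callable[[str], bool]

def is_flagged_by_keywords(text: str) -> bool:
    """Hypothetical offline stand-in for a real moderation model."""
    blocked_terms = {"example banned phrase"}
    lowered = text.lower()
    return any(term in lowered for term in blocked_terms)

def safe_output(generated: str, moderate: ModerationCheck,
                fallback: str = "[content withheld by moderation]") -> str:
    """Return the generated text only if the moderation check does not flag it."""
    if moderate(generated):
        return fallback
    return generated

# With the official SDK, `moderate` could instead be something like:
#   lambda text: client.moderations.create(input=text).results[0].flagged
print(safe_output("Here is a recipe for banana bread.", is_flagged_by_keywords))
print(safe_output("This contains an EXAMPLE BANNED PHRASE.", is_flagged_by_keywords))
```

Keeping the check behind a plain callable means the gating logic does not change when the underlying moderation model is upgraded.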

A Pledge for Privacy: No More Training on API Data

Here’s a bold move by OpenAI: they’ve committed to not using data sent to the OpenAI API for training or improving their models. In OpenAI’s words:

“By default, data sent to the OpenAI API will not be used to train or improve OpenAI models.”

In an era where data privacy concerns are sky-high, this decision stands out. It shows a commitment to user privacy and trust, a cornerstone in the relationship between AI providers and their users.

Balancing Act: Native Support for Shortening Embeddings

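Lastly, OpenAI introduced native support for shortening embeddings in the new models: developers can request a shorter vector, trimming values from the end of the embedding without it losing its concept-representing properties. According to OpenAI, a text-embedding-3-large embedding shortened to 256 dimensions still outperforms a full 1,536-dimension text-embedding-ada-002 embedding on the MTEB benchmark.

This feature lets users find a sweet spot between performance and resource usage. It’s like having a dimmer switch for your embeddings: keep the full vector when accuracy matters most, or dial it down to save storage and speed up similarity search.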
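Through the API, this is exposed as a `dimensions` parameter on the embeddings endpoint, for example `client.embeddings.create(model="text-embedding-3-large", input=text, dimensions=256)` in the official Python SDK. OpenAI’s documentation also describes shortening a full-length vector yourself by keeping its first values and re-normalizing it to unit length. The sketch below shows that manual variant on a made-up 8-dimensional vector; `shorten_embedding` and `storage_bytes` are hypothetical helper names, and the storage figures assume each value is stored as a 4-byte float32.

```python
import math

def shorten_embedding(vec: list[float], dims: int) -> list[float]:
    """Keep the first `dims` values, then rescale the result to unit (L2) length."""
    if not 0 < dims <= len(vec):
        raise ValueError("dims must be between 1 and len(vec)")
    head = vec[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in head]

def storage_bytes(num_vectors: int, dims: int, bytes_per_value: int = 4) -> int:
    """Raw storage for num_vectors embeddings, assuming float32 (4-byte) values."""
    return num_vectors * dims * bytes_per_value

# Made-up 8-dimensional vector for illustration only.
full = [0.6, 0.4, 0.3, 0.2, 0.4, 0.3, 0.2, 0.1]
short = shorten_embedding(full, 4)
print(short)

# One million vectors at text-embedding-3-large's full 3072 dimensions vs. 256.
print(storage_bytes(1_000_000, 3072) / 1e9, "GB")  # 12.288 GB
print(storage_bytes(1_000_000, 256) / 1e9, "GB")   # 1.024 GB
```

Re-normalizing matters because cosine similarity and dot-product search assume unit-length vectors; without it, truncated vectors would carry smaller magnitudes and skew the scores.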

OpenAI and Microsoft: A Symbiotic Relationship

It’s impossible to discuss OpenAI’s advancements without mentioning Microsoft, their close partner.

Microsoft’s involvement has been instrumental in scaling these models. The synergy between OpenAI’s cutting-edge AI research and Microsoft’s robust infrastructure is a match made in tech heaven, driving forward the capabilities of generative AI.

In Perspective: The Future of Artificial Intelligence

So, what does all this mean for the future of Artificial Intelligence? We are witnessing a significant shift towards more efficient, ethical, and versatile AI models. The advancements in GPT-4 Turbo and its siblings are not just technical achievements; they represent a deeper understanding of what it means to integrate AI into our daily lives responsibly.

FAQ Section:

- What is GPT-4 Turbo?

GPT-4 Turbo is an advanced version of OpenAI’s GPT-4 model, optimized for higher performance, lower latency, and higher throughput.

- How does GPT-4 Turbo differ from previous models?

It offers quicker responses and can handle more requests, significantly improving efficiency and performance over previous models.

- What role does Microsoft play in GPT-4 Turbo’s development?

Microsoft has been instrumental in scaling the model, combining OpenAI’s AI research with Microsoft’s robust infrastructure.

- What are the ethical considerations with GPT-4 Turbo?

OpenAI has released an updated text moderation model to ensure that content generated remains safe and appropriate.

- How does GPT-4 Turbo address data privacy?

OpenAI has pledged that, by default, data sent to its API will not be used for training or improving its models, emphasizing user privacy and trust.