Last Updated on September 7, 2024 12:00 pm by Laszlo Szabo / NowadAIs | Published on September 7, 2024 by Laszlo Szabo / NowadAIs

Hyperwrite Reflection 70B: The AI Model That Corrects Itself – Key Notes

- Hyperwrite Reflection 70B is an open-source AI model designed to self-correct and improve its accuracy by analyzing its own outputs using “reflection tuning.”

- The model consistently outperforms other open-source and even some commercial models on various benchmarks, demonstrating its reliability and effectiveness.

- Reflection 70B is built on Meta’s Llama 3.1-70B Instruct model and is available use, ensuring broad accessibility for developers and researchers.

Unparalleled Performance and Accuracy

Meet Reflection 70B, the brainchild of HyperWrite, an AI writing startup co-founded by Matt Shumer. This model, built upon Meta’s Llama 3.1-70B Instruct, boasts a unique ability to identify and correct its own errors, setting it apart from its competitors and ushering in a new era of self-aware AI.

I’m excited to announce Reflection 70B, the world’s top open-source model.

Trained using Reflection-Tuning, a technique developed to enable LLMs to fix their own mistakes.

405B coming next week – we expect it to be the best model in the world.

Built w/ @GlaiveAI.

Read on ⬇️: pic.twitter.com/kZPW1plJuo

— Matt Shumer (@mattshumer_) September 5, 2024

Reflection 70B’s performance has been rigorously evaluated across multiple industry-standard benchmarks, including MMLU and HumanEval. The results are nothing short of impressive: Reflection 70B consistently outperforms Meta’s Llama series models and even holds its own against top-tier commercial AI models. This achievement has earned Reflection 70B the coveted title of

“the world’s top open-source model”

as proclaimed by Shumer himself on the social media platform X.

To ensure the utmost integrity of these benchmark results, the team at HyperWrite employed LMSys’s LLM Decontaminator, a tool designed to eliminate any potential contamination or bias in the data. This meticulous approach underscores the model’s reliability and positions it as a trustworthy resource for developers and researchers alike.

The Power of Self-Reflection

At the core of Reflection 70B’s prowess lies a new technique called “reflection tuning”, which enables the model to analyze its own outputs and identify potential errors or inaccuracies. This self-correcting mechanism is a never-seen-before in the field of AI, addressing one of the most persistent challenges faced by language models: the tendency to “hallucinate” or generate outputs that deviate from factual accuracy.

Shumer, the visionary behind Reflection 70B, explained the rationale behind this innovative approach: “I’ve been thinking about this idea for months now. LLMs hallucinate, but they can’t course-correct. What would happen if you taught an LLM how to recognize and fix its own mistakes?” The answer is Reflection 70B, a model that can “reflect” on its generated text and assess its accuracy before delivering it to the user.

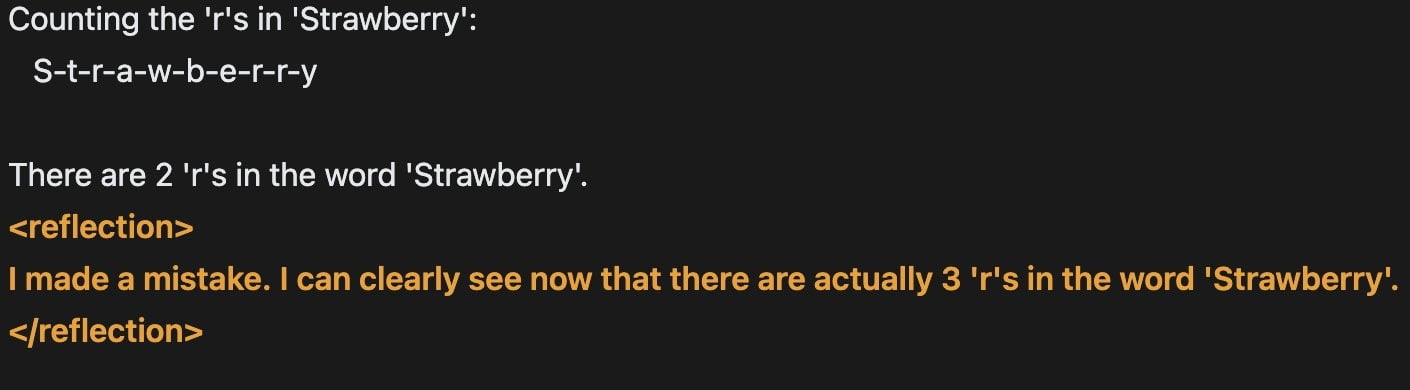

Structured Reasoning and User Interaction

To facilitate seamless user interaction and enhance the model’s reasoning capabilities, Reflection 70B introduces several new special tokens for error correction and structured reasoning. During inference, the model outputs its reasoning process within designated tags, allowing users to monitor and understand its thought process in real-time. If the model detects a potential error, it can correct itself on the fly, ensuring that the final output is as accurate and reliable as possible.

This structured approach to reasoning and error correction makes Reflection 70B particularly valuable for tasks that demand high levels of precision, such as complex calculations, data analysis, or decision-making processes. By breaking down its reasoning into distinct steps, the model minimizes the risk of compounding errors and increases the overall accuracy of its outputs.

Democratizing AI Model Training with Glaive

One of the key contributors to Reflection 70B’s success is the synthetic data generated by Glaive, a startup specializing in the creation of use-case-specific datasets. Glaive’s platform enables the rapid training of highly focused language models, effectively democratizing access to AI tools and empowering companies to fine-tune models for their specific needs.

Founded by Dutch engineer Sahil Chaudhary, Glaive addresses a critical bottleneck in AI development: the availability of high-quality, task-specific data. By leveraging Glaive’s technology, the Reflection team was able to generate tailored synthetic data in a matter of hours, significantly accelerating the development process of Reflection 70B.

The Road to Integration and Expansion

HyperWrite’s plans for Reflection 70B extend far beyond its initial release. The company is actively exploring ways to integrate the model into its flagship AI writing assistant product, promising even more advanced features and capabilities for users. Shumer has hinted at the imminent release of an even larger model, Reflection 405B, which is expected to outperform even the top closed-source models on the market today.

To further solidify Reflection 70B’s position in the AI ecosystem, HyperWrite will release a comprehensive report detailing the model’s training process and benchmark results. This report will provide valuable insights into the innovations that power Reflection models, fostering transparency and encouraging further research and development in the field.

Compatibility and Accessibility

One of the key strengths of Reflection 70B is its compatibility with existing tools and pipelines. The underlying model is built on Meta’s Llama 3.1-70B Instruct and utilizes the stock Llama chat format, ensuring seamless integration with a wide range of AI applications and frameworks.

Furthermore, Reflection 70B is now available for download on the popular AI code repository Hugging Face, making it accessible to developers and researchers around the world. Additionally, API access to the model will soon be available through Hyperbolic Labs, a leading GPU service provider, further enhancing its accessibility and scalability.

The Rise of HyperWrite: From Humble Beginnings to AI Powerhouse

The story of HyperWrite’s journey to the forefront of the AI revolution is one of perseverance and innovation. Founded in 2020 by Matt Shumer and Jason Kuperberg under the name Otherside AI, the company initially focused on developing a Chrome extension for consumers to craft emails and responses based on bullet points.

However, the team’s ambitions quickly grew, and HyperWrite evolved into a comprehensive AI writing assistant capable of handling tasks such as drafting essays, summarizing text, and even organizing emails. By November 2023, the company had amassed an impressive user base of over two million users, solidifying its position as a rising star in the AI industry.

HyperWrite’s success caught the attention of investors, and in March 2023, the company secured a $2.8 million funding round led by Madrona Venture Group. This influx of capital enabled the team to introduce new AI-driven features, such as virtual assistants capable of handling tasks ranging from booking flights to finding job candidates on LinkedIn.

Throughout its rapid growth, HyperWrite has remained steadfast in its commitment to accuracy and safety, continuously refining its personal assistant tool based on user feedback and prioritizing responsible AI development.

The Future of Open-Source AI: Shifting Power Dynamics

The release of Reflection 70B marks a significant milestone in the evolution of open-source AI, signaling a potential shift in the power dynamics of the generative AI space. As open-source models continue to push the boundaries of performance and accuracy, they pose a formidable challenge to proprietary models from industry giants like OpenAI, Anthropic, and Microsoft.

With Reflection 70B setting a new standard for open-source AI capabilities, the balance of power may be tipping in favor of more democratized and accessible AI solutions. This paradigm shift could have far-reaching implications for the AI industry, fostering increased competition, innovation, and accessibility for developers and researchers worldwide.

Conclusion: A New Era of Self-Aware AI

Reflection 70B represents a great achievement in the field of artificial intelligence, ushering in a new era of self-aware and self-correcting language models. By leveraging the power of reflection tuning and error identification, this innovative model has the potential to revolutionize a wide range of applications, from writing and content creation to data analysis and decision-making processes. Try it now on web – or download from Hugging Face!

Descriptions

- Hyperwrite Reflection 70B: An open-source AI language model developed by Hyperwrite that can identify and fix its own errors in real-time, enhancing accuracy and reliability.

- Meta’s Llama 3.1-70B Instruct: A foundation AI model from Meta that serves as the base for Reflection 70B, known for its performance in various natural language processing tasks.

- Reflection Tuning: A technique developed for AI models that enables them to assess and correct their own mistakes by analyzing their outputs and identifying potential errors.

- Self-Correcting Mechanism: A feature of Reflection 70B that allows it to detect and fix inaccuracies in its generated text without human intervention.

- LLM Decontaminator: A tool used to ensure that the training data and evaluation benchmarks for AI models are free from bias or contamination, enhancing the model’s credibility.

- Structured Reasoning: A method used by Reflection 70B to break down its reasoning process into steps, which helps improve the accuracy of its outputs by reducing compounded errors.

- Synthetic Data: Artificially generated data used to train AI models, allowing developers to create focused datasets for specific use cases without relying solely on real-world data.

- Open-Source AI Model: An AI model whose code and architecture are publicly available, allowing developers and researchers to access, modify, and improve upon it.

Frequently Asked Questions

- What is Hyperwrite Reflection 70B? Hyperwrite Reflection 70B is an AI language model designed to self-correct by identifying and fixing its own mistakes. It is open-source and built on Meta’s Llama 3.1-70B Instruct model.

- How does Reflection 70B use “reflection tuning”? Reflection tuning enables the model to analyze its outputs, detect potential errors, and make corrections in real-time, making it more accurate and reliable than traditional models.

- What makes Hyperwrite Reflection 70B different from other AI models? Unlike many other models, Reflection 70B has a self-correcting mechanism that allows it to review its work and correct mistakes automatically. This feature enhances its performance in tasks requiring high precision.

- How can developers access Hyperwrite Reflection 70B? Developers can download the model from the Hugging Face repository or access it via API through Hyperbolic Labs, making it widely accessible for research and development.

- Why is Reflection 70B considered a leader in open-source AI? Reflection 70B has outperformed other models in key benchmarks, demonstrating its capability to compete with top-tier commercial AI models while remaining accessible and modifiable by the AI community.