Last Updated on March 28, 2024 12:38 pm by Laszlo Szabo / NowadAIs | Published on March 28, 2024 by Laszlo Szabo / NowadAIs

MIT Breaking Speed Limits in Faster AI Image Generation: 30x Multiplier with DMD Framework – Key Notes:

- MIT researchers developed a novel method for faster high-quality AI image generation.

- Drawing on principles from GANs and diffusion models, the technique speeds up image generation by reducing it to a single step.

- The Distribution Matching Distillation (DMD) framework significantly reduces computational time while maintaining or enhancing image quality.

- DMD achieves 30x faster generation compared to traditional diffusion models, without sacrificing visual content quality.

- The framework’s applications span design tools, drug discovery, and 3D modeling, promising efficiency in creative and scientific fields.

MIT’s Faster AI Image Generation Method

AI technology has transformed many fields, including image generation.

In recent years, researchers at the Massachusetts Institute of Technology (MIT) have made significant advancements in the field by developing a novel method that accelerates the process of generating high-quality images using AI. This technique simplifies the image generation process to a single step, while maintaining or even enhancing the image quality.

By leveraging the principles of generative adversarial networks (GANs) and diffusion models, this new approach has the potential to transform the speed and quality of AI image generation.

The Complexity of Image Generation

Traditionally, generating high-quality images using AI has been a complex and time-intensive process. Diffusion models, which iteratively add structure to a noisy initial state until a clear image or video emerges, have gained popularity in recent years. However, these models require many iterations to refine the image, and each iteration is a full pass through the network, which leads to significant computational time.
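The cost difference can be made concrete with a toy sketch. The `CountingDenoiser` class and both sampler functions below are hypothetical stand-ins, not the researchers' code, and the choice of 50 steps is an arbitrary assumption. The point is only that an iterative sampler calls the network once per step, while a single-step generator calls it once in total.

```python
import random


class CountingDenoiser:
    """Toy stand-in for a trained network; it only counts forward passes."""

    def __init__(self, target=2.0):
        self.target = target
        self.calls = 0

    def __call__(self, x, t):
        # A real network would use x and t; this toy always predicts `target`.
        self.calls += 1
        return self.target


def sample_multistep(denoiser, x, num_steps):
    """Iteratively pull a noisy sample toward the network's prediction."""
    for i in range(num_steps, 0, -1):
        t = i / num_steps
        x0_pred = denoiser(x, t)
        # Keep a shrinking fraction of the remaining noise at every step.
        x = x0_pred + (x - x0_pred) * (i - 1) / i
    return x


def sample_onestep(generator, z):
    """Map noise to an output with a single network call."""
    return generator(z, 1.0)


multi = CountingDenoiser()
single = CountingDenoiser()
noise = random.Random(0).gauss(0.0, 1.0)

x_multi = sample_multistep(multi, noise, num_steps=50)
x_single = sample_onestep(single, noise)
print(multi.calls, single.calls)  # prints: 50 1
```

In this toy both samplers land on the same output, so the only difference is the number of network evaluations.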

MIT researchers from the Computer Science and Artificial Intelligence Laboratory (CSAIL) have introduced a new framework known as Distribution Matching Distillation (DMD) that simplifies the multi-step process of traditional diffusion models into a single step. This framework involves teaching a new computer model to mimic the behavior of more complicated, original models that generate images. By doing so, the DMD approach achieves image generation that is 30 times faster than previous diffusion models, while retaining or surpassing the quality of the generated visual content.

The DMD Framework: A Teacher-Student Model

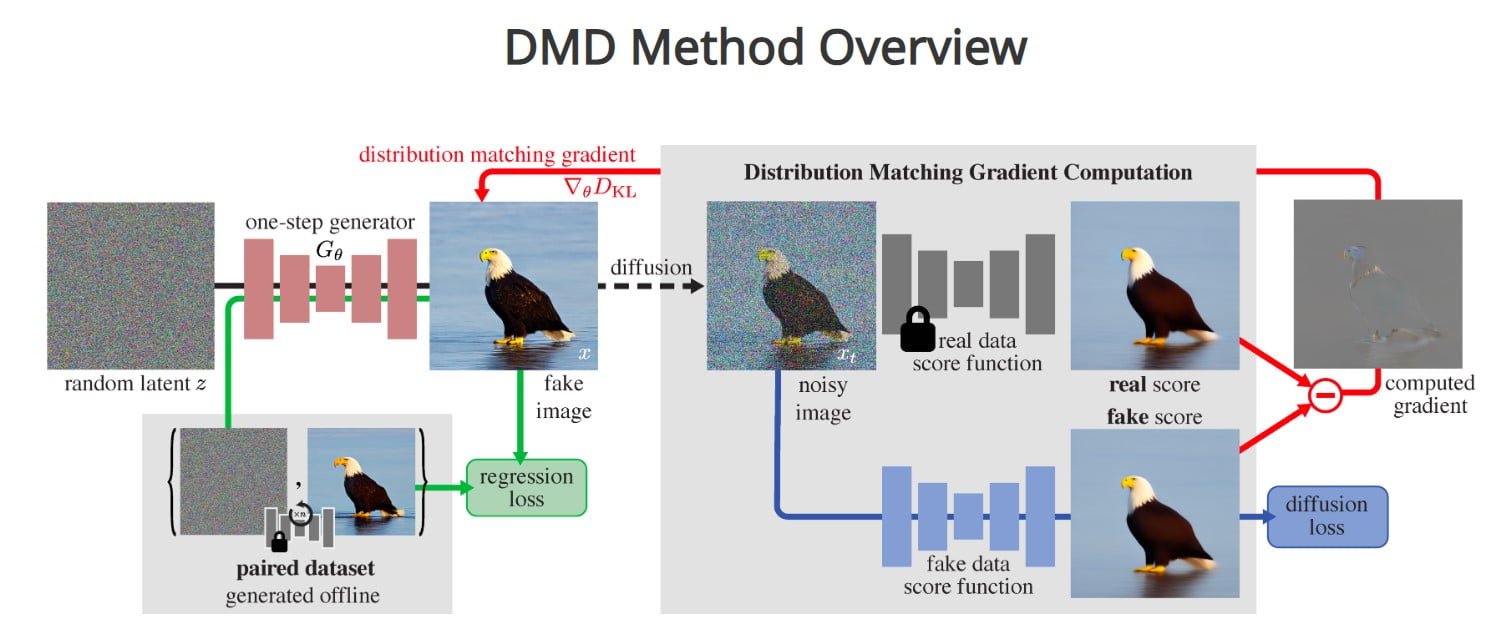

The DMD framework developed by MIT researchers combines two components to achieve fast, high-quality image generation. The first component is a regression loss, which anchors the mapping to ensure a coarse organization of the space of images, making the training process more stable. The second component is a distribution matching loss, which ensures that the probability of generating a given image with the student model corresponds to its real-world occurrence frequency.
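As a rough illustration of the first component, the sketch below computes a regression loss over paired data: each noise input is paired with the output the teacher produced from it, and the student is penalized for deviating from that target. Everything here is an assumption made for illustration: the scalar "images", the affine `teacher_output` function standing in for a full multi-step sampler, and the use of squared error as the distance.

```python
def teacher_output(z):
    """Hypothetical stand-in for the teacher's multi-step result on noise z."""
    return 3.0 * z + 1.0


def make_student(scale, shift):
    """Build a toy one-step student: a single affine map from noise to output."""
    return lambda z: scale * z + shift


def regression_loss(student, pairs):
    """Mean squared error between student outputs and stored teacher outputs."""
    return sum((student(z) - target) ** 2 for z, target in pairs) / len(pairs)


# Precompute (noise, teacher output) pairs once, before training the student.
noises = [-1.0, -0.5, 0.0, 0.5, 1.0]
pairs = [(z, teacher_output(z)) for z in noises]

untrained = make_student(scale=1.0, shift=0.0)
matched = make_student(scale=3.0, shift=1.0)

print(regression_loss(untrained, pairs))  # positive: student disagrees with teacher
print(regression_loss(matched, pairs))    # zero: student reproduces every target
```

Because the pairs tie specific noise inputs to specific outputs, this term keeps the student's mapping roughly aligned with the teacher's while the distribution matching term handles overall realism. How the two terms are weighted against each other is a tuning choice not covered by this sketch.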

To achieve this, DMD leverages two diffusion models that act as guides, helping the system understand the difference between real and generated images. By using these diffusion models to approximate the gradients that guide the new model's improvement, the researchers distill the knowledge of the original, more complex model into the simpler, faster one. This approach bypasses the instability and mode collapse issues commonly associated with GANs.
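The role of the two guides can be sketched in one dimension. Assume, purely for illustration, that the real data follow a Gaussian with mean `real_mean`, that the student is the shift `G(z) = z + theta`, and that both guides return exact Gaussian scores (gradients of the log-density) rather than estimates from trained diffusion models. Under those assumptions, the difference between the "fake" score and the "real" score gives an update direction that moves the student's output distribution toward the real one.

```python
import random


def gaussian_score(x, mean, std=1.0):
    """Score (gradient of the log-density) of a 1-D Gaussian at x."""
    return (mean - x) / (std ** 2)


def train_student_shift(real_mean, steps=200, lr=0.1, batch=64, seed=0):
    """Fit the toy student G(z) = z + theta using only score differences."""
    rng = random.Random(seed)
    theta = -3.0  # arbitrary starting point, far from the real mean
    for _ in range(steps):
        grad = 0.0
        for _ in range(batch):
            x = rng.gauss(0.0, 1.0) + theta  # one-step student sample
            fake = gaussian_score(x, theta)      # guide for generated data
            real = gaussian_score(x, real_mean)  # guide for real data
            # dG/dtheta = 1, so the score difference is the gradient itself.
            grad += fake - real
        theta -= lr * grad / batch
    return theta


theta = train_student_shift(real_mean=2.0)
print(theta)  # ends close to the real mean
```

No discriminator is trained here: the update comes entirely from comparing what the two guides say about the same sample.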

Advantages of the Single-Step Diffusion Model

The single-step diffusion model developed by MIT researchers offers several advantages over traditional methods. Firstly, it significantly reduces computational time, making it possible to generate high-quality images 30 times faster. Tianwei Yin, an MIT PhD student, said:

“Our work is a novel method that accelerates current diffusion models such as Stable Diffusion and DALLE-3 by 30 times.”

This acceleration opens up new possibilities for design tools, content creation, and applications in fields like drug discovery and 3D modeling, where promptness and efficacy are crucial.

DMD-generated images also hold up when compared with those from traditional methods. On benchmarks such as class-conditional image generation on ImageNet, DMD achieves Fréchet inception distance (FID) scores on par with those of the original, more complex models. This indicates that the quality and diversity of the generated images are preserved. Additionally, DMD demonstrates state-of-the-art performance in industrial-scale text-to-image generation.
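For reference, FID fits a Gaussian to feature statistics of real and generated images and measures the Fréchet distance between the two fits. The sketch below uses the one-dimensional special case, where the distance reduces to the squared difference of means plus the squared difference of standard deviations. The scalar "features" are made-up numbers; an actual FID computation uses high-dimensional Inception network features and full covariance matrices.

```python
import statistics


def frechet_distance_1d(real_features, generated_features):
    """Fréchet distance between 1-D Gaussians fitted to two samples."""
    mu_r = statistics.fmean(real_features)
    mu_g = statistics.fmean(generated_features)
    sd_r = statistics.pstdev(real_features)
    sd_g = statistics.pstdev(generated_features)
    # Mean term plus spread term; both vanish when the two fits coincide.
    return (mu_r - mu_g) ** 2 + (sd_r - sd_g) ** 2


real = [0.0, 1.0, 2.0, 3.0, 4.0]
same = list(real)
shifted = [x + 3.0 for x in real]

print(frechet_distance_1d(real, same))     # identical statistics: distance 0
print(frechet_distance_1d(real, shifted))  # same spread, means 3 apart: distance 9
```

Lower values mean the generated statistics sit closer to the real ones, which is why matching the teacher's FID is read as preserving both quality and diversity.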

Training and Optimization of the DMD Framework

To train the new student model in the DMD framework, MIT researchers utilize pre-trained networks from the original models. By copying and fine-tuning parameters, they achieve fast training convergence of the new model, which maintains the high-quality image generation capabilities of the original architecture. This also enables further acceleration of the creation process through other system optimizations based on the original architecture.
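A minimal sketch of the copy-then-fine-tune idea, using plain Python dictionaries in place of real network weights. The parameter names, the gradient values, and the `finetune_step` helper are all hypothetical; the sketch only shows that the student starts from the teacher's values and is then updated independently.

```python
import copy


def init_student_from_teacher(teacher_params):
    """Start the student from an independent copy of the teacher's weights."""
    return copy.deepcopy(teacher_params)


def finetune_step(params, grads, lr=0.01):
    """Apply one plain gradient-descent update to the given parameters."""
    for name, grad in grads.items():
        params[name] = [w - lr * g for w, g in zip(params[name], grad)]


teacher_params = {"layer.weight": [0.5, -0.2], "layer.bias": [0.1]}
student_params = init_student_from_teacher(teacher_params)

# Hypothetical gradients from a distillation loss.
grads = {"layer.weight": [1.0, -1.0], "layer.bias": [0.0]}
finetune_step(student_params, grads)

print(teacher_params["layer.weight"])  # teacher is left untouched
print(student_params["layer.weight"])  # student has moved away from it
```

Starting from the teacher's weights means the student begins with features that already work for image generation, which is consistent with the fast convergence described above.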

However, the performance of the DMD-generated images is inherently linked to the capabilities of the teacher model used during the distillation process. In its current form, which uses Stable Diffusion v1.5 as the teacher model, the student inherits certain limitations. For example, rendering detailed depictions of text and small faces may still present challenges. The DMD-generated images could be further enhanced with more advanced teacher models in the future.

Applications and Future Implications

The single-step diffusion model developed by MIT researchers has applications across several fields. In design tools, faster image generation enables quicker content creation, allowing designers to iterate and experiment more efficiently. In drug discovery, where speed and efficacy are crucial, the DMD framework could help by accelerating image generation and aiding researchers in visualizing molecular structures.

Moreover, the DMD framework has implications for 3D modeling, where the ability to generate high-quality images quickly is essential. By reducing the computational time required for image generation, the DMD approach can facilitate the creation of realistic 3D models in a more efficient manner.

As the DMD framework continues to evolve, there is potential for further improvements and advancements. By exploring more advanced teacher models and refining the training process, researchers can push the boundaries of single-step image generation. This could result in even faster and higher-quality AI-generated images, opening up new possibilities for real-time visual editing and other applications.

Definitions

- MIT: The Massachusetts Institute of Technology, a prestigious research university known for its cutting-edge advancements in technology and engineering.

- AI Image Generation: The process of creating visual content from textual or other forms of input using artificial intelligence algorithms, typically involving complex models like GANs or diffusion models.

- Diffusion Model: A type of generative model used in AI that progressively adds detail to an initial noise pattern to create complex images or videos, simulating a reverse diffusion process.

- 3D Modeling: The technique of developing a mathematical representation of any surface of an object in three dimensions via specialized software, widely used in various fields like gaming, architecture, and film.

- FID Scores (Fréchet Inception Distance): A metric used to evaluate the quality of images generated by AI, comparing the distribution of generated images to real images, with lower scores indicating better quality.

Frequently Asked Questions

- What breakthroughs does faster AI image generation bring to creative fields?

- Faster AI image generation dramatically reduces the time needed to produce high-quality images, allowing creators and designers to rapidly bring their visions to life. This method, particularly the DMD framework developed by MIT, facilitates quicker content creation, enabling artists to explore and iterate designs with unprecedented speed.

- How does MIT’s DMD framework enhance the speed of AI image generation?

- MIT’s Distribution Matching Distillation (DMD) framework simplifies the traditionally complex multi-step diffusion process into a single step, achieving a 30-fold increase in speed without compromising the quality of the generated images. This innovation marks a significant leap forward in making AI-assisted content creation more efficient.

- Can the DMD framework’s faster AI image generation be applied to fields outside of art and design?

- Yes, the DMD framework’s capabilities extend beyond art and design into areas like drug discovery and 3D modeling. Its ability to quickly generate detailed visualizations can aid in visualizing molecular structures for drug development and creating realistic 3D models, enhancing both scientific research and entertainment applications.

- What challenges does the DMD framework face in generating detailed images?

- While the DMD framework significantly accelerates the image generation process, it inherits limitations from the teacher model it uses. Challenges include rendering intricate details like text and small facial features, which are areas for future improvement as the technology advances.

- What future advancements are expected in faster AI image generation technologies like DMD?

- Future advancements in faster AI image generation technologies, including DMD, are expected to focus on refining the training process and exploring more advanced teacher models. These improvements aim to further enhance the speed and quality of generated images, potentially leading to real-time visual editing capabilities and broader application across various industries.