Last Updated on February 14, 2024 4:37 pm by Laszlo Szabo / NowadAIs | Published on February 14, 2024 by Juhasz “the Mage” Gabor

Nvidia’s Chat with RTX: AI Chatbots Move From Cloud to Desktop – Key Notes

- Nvidia Corp. introduced Chat with RTX, which lets users run personalized AI assistants on their own PCs.

- Operates locally, ensuring privacy and reducing latency.

- Requires GeForce RTX 30 Series GPU or newer.

- Allows customization and access to local files for personalized responses.

- Uses retrieval-augmented generation (RAG) and Nvidia's TensorRT-LLM software for more relevant answers and faster performance.

Chat with RTX: A Locally Running AI Chatbot

Nvidia Corp. has introduced Chat with RTX, a new feature that uses artificial intelligence to let users build a personalized AI assistant that runs on their own PC or laptop instead of in the cloud.

It is the latest in a string of Nvidia developments shaking up the AI field.

Nvidia announced the release of Chat with RTX today as a free technology demo.

The demo gives users personalized AI capabilities that reside on their own device. It incorporates retrieval-augmented generation (RAG) methods and Nvidia's TensorRT-LLM software, and Nvidia says it is optimized to keep its demands on computing resources low so that it does not degrade the performance of the user's device.

Because Chat with RTX runs on the user's device, conversations stay on that device, keeping discussions with the personal AI chatbot private.

Technical Requirements and Setup

Until now, ChatGPT and other generative AI chatbots have primarily run in the cloud, on centralized servers powered by Nvidia graphics processing units (GPUs).

Chat with RTX changes this by processing generative AI locally, on the GPU in the user's own computer.

To use it, a laptop or PC needs a GeForce RTX 30 Series GPU or newer RTX hardware, such as the recently announced RTX 2000 Ada Generation GPU, along with at least 8 gigabytes of video random-access memory (VRAM).
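Some back-of-the-envelope arithmetic suggests why the VRAM floor matters. The sketch below is an illustration only: it counts the memory for model weights alone and ignores the KV cache, activations and runtime overhead, all of which need additional memory. The 7-billion and 13-billion parameter counts correspond to published sizes of Mistral 7B and Llama 2; which model variants and precisions Chat with RTX actually ships is an assumption not covered here.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (10**9 bytes).

    Ignores the KV cache, activations and framework overhead, so the real
    VRAM footprint is higher than this estimate.
    """
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 1e9


VRAM_GB = 8  # minimum VRAM stated for Chat with RTX

for name, params in [("7B model", 7e9), ("13B model", 13e9)]:
    for bits in (16, 4):
        gb = weight_memory_gb(params, bits)
        verdict = "weights fit" if gb < VRAM_GB else "weights do not fit"
        print(f"{name} at {bits}-bit: {gb:.1f} GB ({verdict} in {VRAM_GB} GB)")
```

Under these assumptions a 7B model needs about 14 GB for its weights at 16-bit precision but about 3.5 GB at 4-bit, which hints at why reduced-precision weights matter for running such models on an 8 GB card.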

One of the main benefits of running a chat assistant locally is that users can customize it by choosing what information it can draw on when generating responses.

There are also privacy advantages, and responses can arrive faster because there is none of the network latency associated with cloud-based services.

Chat with RTX uses RAG to supplement the language model's built-in knowledge with additional data sources, such as files stored locally on the device.
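As a rough illustration of the retrieval step in RAG, the sketch below ranks chunks of text from local files against a question and pastes the best matches into the prompt that would be sent to the language model. It is a minimal sketch, not Nvidia's implementation: simple word-count cosine similarity stands in for the neural embedding model a production RAG pipeline would typically use, and the `Chunk` type, file names and prompt wording are hypothetical.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chunk:
    path: str  # hypothetical: local file the text was taken from
    text: str


def tokenize(text: str) -> list[str]:
    # Lowercased word tokens. Real RAG pipelines typically embed text with a
    # neural model instead; word counts keep this sketch dependency-free.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[Chunk], k: int = 2) -> list[Chunk]:
    # Rank every chunk by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c.text))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, chunks: list[Chunk], k: int = 2) -> str:
    # "Augment" the question with the retrieved text before generation.
    context = "\n".join(f"[{c.path}] {c.text}" for c in retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}\nAnswer:"


docs = [
    Chunk("notes/travel.txt", "Lisbon has great seafood restaurants near the river."),
    Chunk("notes/work.txt", "The quarterly report is due on Friday."),
    Chunk("notes/food.txt", "My favorite restaurant in Lisbon serves grilled sardines."),
]
print(build_prompt("Which restaurants in Lisbon should I try?", docs))
```

Only the two notes that mention Lisbon make it into the prompt, while the unrelated work note is left out. The language model would then generate its answer from this assembled prompt, which is how RAG grounds responses in the user's own files.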

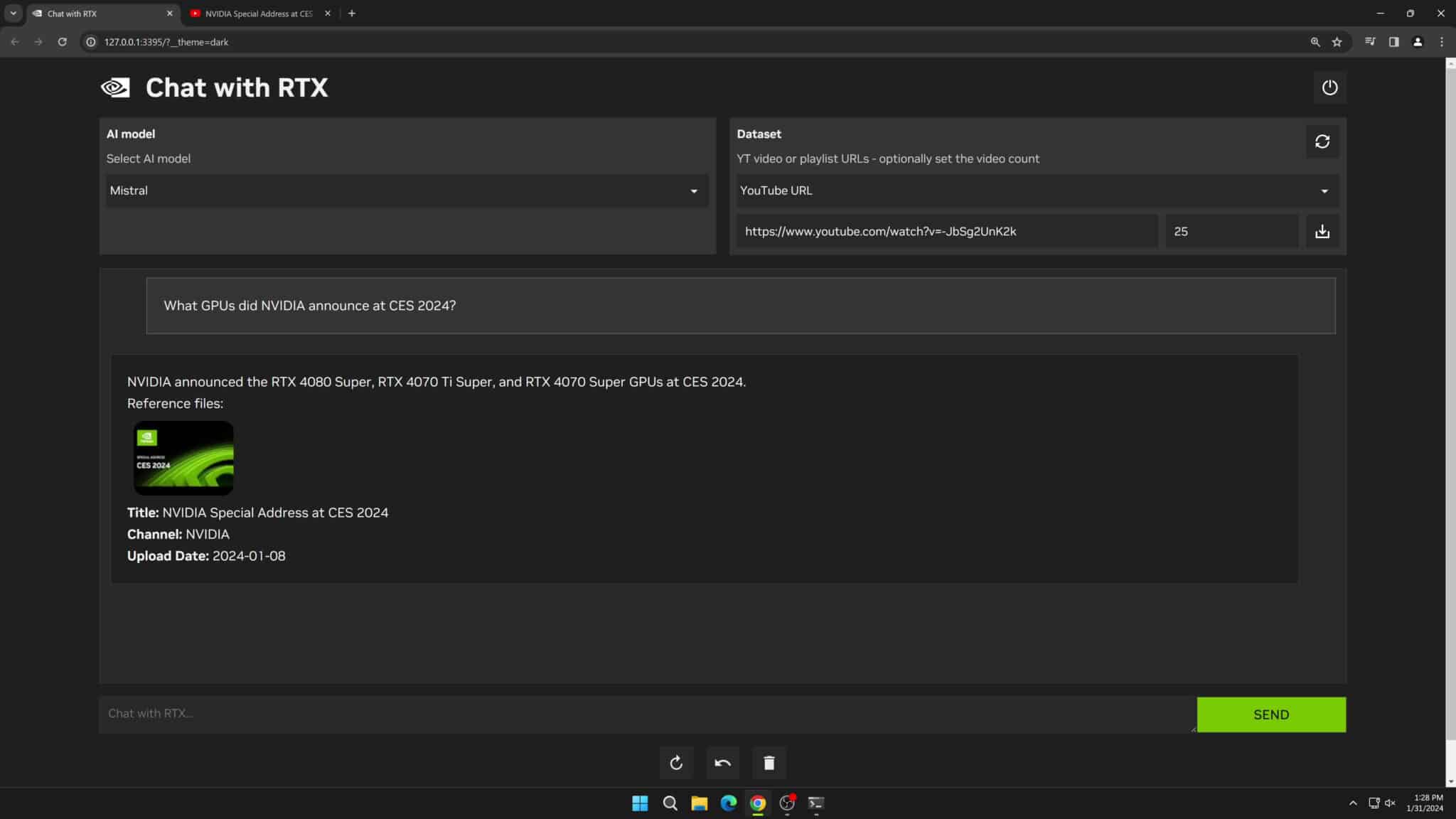

TensorRT-LLM and Nvidia's RTX acceleration software further speed up response generation. Users can also choose from several open-source LLMs, including Llama 2 and Mistral.

Performance and Technology Behind Chat with RTX

According to Nvidia, the customized assistants can handle the same kinds of queries people typically put to ChatGPT, such as asking for restaurant recommendations.

Responses also include relevant context and a link to the file the information came from.
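Attaching sources to an answer is straightforward once the retrieval step keeps track of where each piece of text came from. The following is a hypothetical sketch (the `RetrievedChunk` type and `format_response` helper are illustrative names, not part of Chat with RTX) that appends each cited file once, as a `file://` link, after the model's answer.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass
class RetrievedChunk:
    path: str  # hypothetical: local file the retrieved text came from
    text: str


def format_response(answer: str, chunks: list[RetrievedChunk]) -> str:
    """Append one file:// link per distinct source file to a model answer."""
    sources: list[str] = []
    for chunk in chunks:
        if chunk.path not in sources:  # cite each file once, in retrieval order
            sources.append(chunk.path)
    # resolve() makes the path absolute, which as_uri() requires.
    links = [Path(p).resolve().as_uri() for p in sources]
    return answer + "\n\nSources:\n" + "\n".join(f"- {link}" for link in links)


chunks = [
    RetrievedChunk("notes/travel.txt", "Lisbon has great seafood restaurants near the river."),
    RetrievedChunk("notes/travel.txt", "Book a table before sunset."),
    RetrievedChunk("notes/food.txt", "My favorite restaurant in Lisbon serves grilled sardines."),
]
print(format_response("Try the seafood places by the river.", chunks))
```

De-duplicating by path keeps the source list short when several retrieved passages come from the same document, and `file://` links let a desktop interface open the original file directly.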

Beyond local files, Chat with RTX users can also choose specific sources on platforms such as YouTube for the chatbot to draw on.

For example, users can ask their personal chat assistant for travel suggestions based solely on content from their favorite YouTubers.

In addition to the GPU requirements above, users need Windows 10 or Windows 11 and the latest Nvidia GPU drivers installed on their computer.
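One way to check a machine against the stated requirements is to query the GPU with `nvidia-smi`, which is installed alongside Nvidia's drivers. The sketch below is a hypothetical helper, not an Nvidia tool: it checks only the operating system family and total VRAM, not the GPU generation or driver version, and the 8000 MiB threshold is an assumption chosen to tolerate 8 GB cards that report slightly less than 8192 MiB.

```python
import platform
import shutil
import subprocess

# Assumption: slightly below 8 * 1024 MiB so 8 GB cards that report a bit less still pass.
MIN_VRAM_MIB = 8000


def parse_gpus(csv_text: str) -> list[tuple[str, int]]:
    """Parse `nvidia-smi --query-gpu=name,memory.total --format=csv,noheader,nounits` output."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, mem = line.rsplit(",", 1)  # the last field is total memory in MiB
        gpus.append((name.strip(), int(mem.strip())))
    return gpus


def meets_vram_requirement(gpus: list[tuple[str, int]]) -> bool:
    # True if at least one GPU reports enough total memory.
    return any(mem >= MIN_VRAM_MIB for _, mem in gpus)


def check_local_machine() -> None:
    if platform.system() != "Windows":
        print("Chat with RTX targets Windows 10 or 11; this system is", platform.system())
    if shutil.which("nvidia-smi") is None:
        print("nvidia-smi not found; is an Nvidia driver installed?")
        return
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    gpus = parse_gpus(out)
    for name, mem in gpus:
        print(f"{name}: {mem} MiB VRAM")
    print("VRAM requirement met:", meets_vram_requirement(gpus))
```

Calling `check_local_machine()` on a Windows PC with an Nvidia driver prints each GPU and whether any of them clears the threshold. The parsing is kept in a separate function so it can be checked without a GPU present.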

Developers can test Chat with RTX through the TensorRT-LLM RAG reference project on GitHub.

Nvidia is also running a Generative AI on Nvidia RTX competition, giving developers a chance to showcase applications built with the technology. Prizes include a GeForce RTX 4090 GPU and an exclusive invitation to Nvidia GTC 2024, scheduled for March.

Frequently Asked Questions

- What is Chat with RTX?

- A free Nvidia tech demo that runs a personalized AI assistant locally on your PC, keeping it private and customizable.

- What are the system requirements for Chat with RTX?

- A GeForce RTX 30 Series GPU or newer with at least 8GB of VRAM, Windows 10 or 11, and the latest Nvidia GPU drivers.

- How does Chat with RTX enhance privacy?

- By processing data locally on your device, it keeps conversations private.

- Can I customize the AI in Chat with RTX?

- Yes, users can choose which information the AI draws on when generating its responses.

- Where can developers test Chat with RTX?

- Through the TensorRT-LLM RAG reference project on GitHub.